Generative AI Hysteria

Dispelling the Hysteria About Generative AI’s Threat to Humanity

After years in leadership positions at some of the largest companies in Silicon Valley, I have witnessed firsthand the transformative power of machine learning and artificial intelligence (AI) and their many benefits. Yet, for much of my career, AI has been little more than a compelling buzzword used as shorthand for our technology progressively getting smarter. Recently, however, a wave of panic has swept across the tech industry regarding the potential dangers of generative AI. In light of recent articles on the topic, I want to look at some of the concerns raised and assess whether the industry’s reaction is justified or stems from an overblown sense of hysteria.

Hype and Hysteria or Valid Concerns?

A recent report by the Center for Data Innovation contends that the industry’s fear of generative AI is exaggerated. The report emphasizes that instead of overreacting, policymakers should focus on thoughtful regulation that enables innovation without stifling progress. It also maps the historical pattern of panic over emerging technologies, from the advent of the printing press to the present, and calls on regulators to recognize that fears about generative AI are part of a predictable cycle.

As AI technology advances, we’ll need a nuanced approach to regulation that acknowledges the potential risks while recognizing the tremendous benefits generative AI can offer. Technologist Marc Andreessen argues that fear-driven narratives often overshadow the positive impact of AI in terms of labor productivity, job creation, and overall economic growth. He stresses the importance of striking a balance by addressing legitimate concerns without succumbing to an alarmist mindset that stifles innovation.

The End of the World (Or Not)

On the other hand, we are seeing warnings from leaders of companies specializing in AI development, including OpenAI, Anthropic, and Google. It’s easy to worry when the people most responsible for driving AI technology forward are predicting a dystopian future where generative AI surpasses human intelligence, leading to the extinction of humanity if left unchecked.

We are a long way from needing to worry about “Skynet” or any of the other dangerous kinds of AI we see in science-fiction media. However, there are real risks associated with AI’s rapid growth, and as a society, we should confront these challenges promptly.

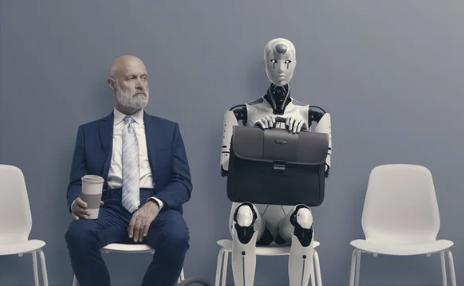

Some of the most immediate and valid concerns are about AI’s potential to create anticompetitive market conditions and displace jobs. We are already seeing evidence of some jobs being lost to AI. Moreover, large companies are more likely than smaller ones to have the resources to implement AI at scale and see a meaningful benefit from doing so. Smaller companies will fall behind these larger competitors if the technology gap gets too wide, reducing competition in the marketplace.

We are still in the early stages of AI development, despite significant advancements in the last several years. This is why it is important to critically evaluate any claims about the threats posed by AI in the context of present-day capabilities and foreseeable developments.

The Path to Responsible AI Development

It’s important to remember that humans are responsible for how advanced AI becomes and what it will be used for. That alone can be cause for concern, as malicious actors have always used new technology for nefarious purposes.

Taking a rational approach to the concerns surrounding generative AI is crucial. Rather than resorting to panic or dismissing the potential risks outright, the tech industry must prioritize responsible development and deployment of AI systems. This entails a multifaceted approach that combines regulatory frameworks, ethical guidelines, and ongoing collaboration between academia, industry, and policymakers. Striking this delicate balance will foster an environment that promotes the growth and safe implementation of generative AI technologies.

Responsible AI development also entails a deep commitment to ethical guidelines. Transparency, accountability, and fairness should be core tenets guiding the creation and use of generative AI systems. Tech companies must prioritize the development of explainable AI models, ensuring that decisions made by AI systems are interpretable and fair. Additionally, adopting ethical frameworks that address bias, privacy, and human rights concerns will further enhance the responsible deployment of AI technologies.

Collaboration and Research

Continued research and open dialogue will facilitate a better understanding of the challenges at hand and enable the development of realistic solutions. Establishing interdisciplinary partnerships and sharing knowledge across domains will not only help identify potential risks but also foster the creation of robust defenses against those risks.

While the issues raised regarding generative AI are not entirely unfounded, I believe that overreacting to the threat of AI would hinder the remarkable potential that this technology holds for our society. By prioritizing targeted and reasonable regulation, ethical considerations, and transparent, collaborative research efforts, we can navigate the path toward a future where generative AI benefits humanity.

It is natural to be concerned when we enter uncharted territory. But it is imperative not to let healthy concern become widespread panic and vilification. As AI technology, and technology in general, continues to advance, a balanced and proactive approach will allow us to harness its full potential while safeguarding our collective well-being.